Most folks install and stop. Don't. The dashboard shows LLM cost, developer time saved, and prompt health, with no prompt content stored.

```bash
pip install docoreai
export DOCOREAI_KEY=YOUR_API_KEY   # Windows: set DOCOREAI_KEY=...
python -m docoreai start           # starts local agent & telemetry
```

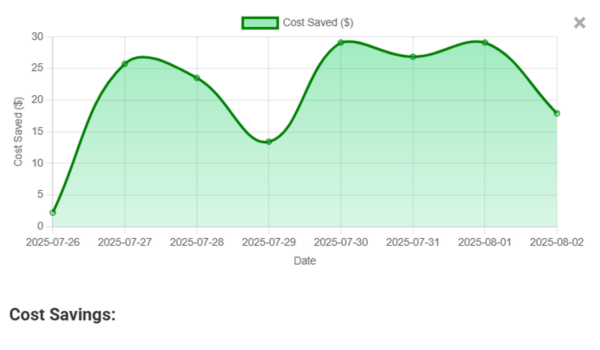

See Cost Savings Over Time

Spot waste, compare models, and track the impact of temperature tuning on your spend.
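Cost tracking like this comes down to simple arithmetic: tokens used times the provider's per-token price, with input and output tokens usually priced separately. Here is a minimal sketch of that calculation, assuming hypothetical model names (`model-a`, `model-b`) and placeholder prices. These are not real OpenAI or Groq rates, and this is not DoCoreAI's own code.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical per-1K-token prices in USD (placeholders, not real provider rates).
PRICES_PER_1K = {
    "model-a": {"input": 0.50, "output": 1.50},
    "model-b": {"input": 0.10, "output": 0.30},
}


@dataclass
class Usage:
    model: str
    input_tokens: int
    output_tokens: int


def request_cost(u: Usage) -> float:
    """Cost of one request: input and output tokens priced separately per 1K tokens."""
    p = PRICES_PER_1K[u.model]
    return (u.input_tokens / 1000) * p["input"] + (u.output_tokens / 1000) * p["output"]


def total_cost_by_model(log: list[Usage]) -> dict[str, float]:
    """Sum request costs per model so models can be compared on the same workload."""
    totals: dict[str, float] = {}
    for u in log:
        totals[u.model] = totals.get(u.model, 0.0) + request_cost(u)
    return totals


if __name__ == "__main__":
    # Illustrative usage log, not measured data.
    log = [
        Usage("model-a", 1200, 400),
        Usage("model-b", 1200, 400),
        Usage("model-a", 800, 900),
    ]
    for model, cost in total_cost_by_model(log).items():
        print(f"{model}: ${cost:.4f}")
```

Swapping in your own usage log and your provider's current price sheet is enough to compare two models side by side.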

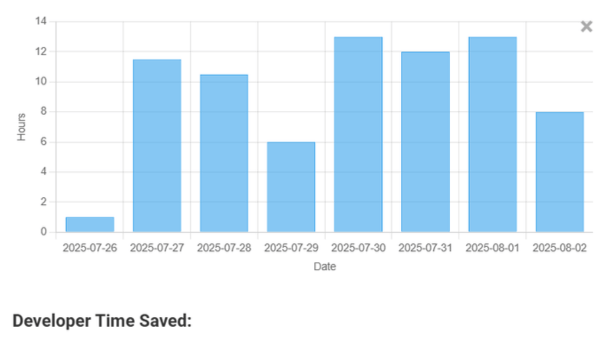

Quantify Developer Time Saved

Fewer retries and faster outputs translate to real hours back per engineer each month.
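The post doesn't spell out how time saved is calculated, so the following is only a back-of-the-envelope sketch under our own assumptions: every avoided retry saves a fixed number of developer minutes, and faster responses save the latency difference on each request. The function name and all inputs are hypothetical.

```python
def hours_saved_per_month(
    retries_before: int,
    retries_after: int,
    minutes_per_retry: float,
    requests: int,
    seconds_saved_per_request: float,
) -> float:
    """Hypothetical estimate of developer hours recovered in a month.

    Adds the minutes saved by avoided retries to the minutes saved by
    faster responses, then converts the total to hours.
    """
    avoided_retries = max(retries_before - retries_after, 0)  # never count negative savings
    retry_minutes = avoided_retries * minutes_per_retry
    latency_minutes = requests * seconds_saved_per_request / 60
    return (retry_minutes + latency_minutes) / 60


if __name__ == "__main__":
    # Illustrative inputs only, not measured data.
    hours = hours_saved_per_month(
        retries_before=120,
        retries_after=45,
        minutes_per_retry=4,
        requests=2000,
        seconds_saved_per_request=1.5,
    )
    print(f"Estimated hours saved this month: {hours:.1f}")
```

With these illustrative inputs, 75 avoided retries account for 300 minutes and faster responses for 50 more, so the estimate is 350 minutes, or about 5.8 hours.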

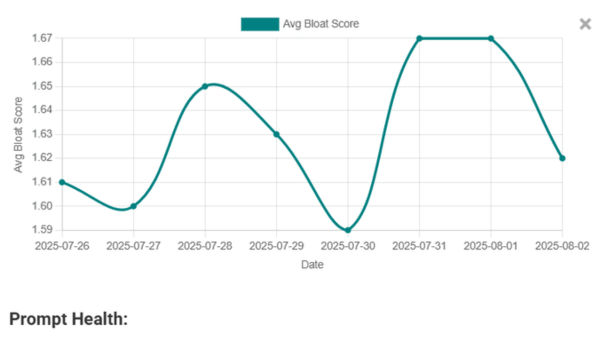

Measure Prompt Health

Track over-generation (bloat) and determinism to cut token waste without hurting quality.
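To make "bloat" and "determinism" concrete, here is a sketch using our own illustrative definitions, not DoCoreAI's: bloat is the share of output tokens beyond a target length, and determinism is the fraction of repeated runs of the same prompt that return the most common output. Both function names are hypothetical.

```python
from __future__ import annotations

from collections import Counter


def bloat_ratio(output_tokens: int, target_tokens: int) -> float:
    """Share of generated tokens beyond a target length (0.0 means no over-generation)."""
    if output_tokens <= 0:
        return 0.0
    return max(output_tokens - target_tokens, 0) / output_tokens


def determinism(outputs: list[str]) -> float:
    """Fraction of repeated runs that returned the single most common output (1.0 = fully repeatable)."""
    if not outputs:
        return 0.0
    _, top_count = Counter(outputs).most_common(1)[0]
    return top_count / len(outputs)


if __name__ == "__main__":
    print(bloat_ratio(output_tokens=500, target_tokens=300))  # 200 of 500 tokens are over target
    print(determinism(["A", "A", "B", "A"]))  # 3 of 4 runs agree
```

A high bloat ratio is a hint to ask for a shorter answer in the prompt; low determinism on a task that needs consistent answers is a hint to try a lower temperature.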

Works with OpenAI & Groq today. More providers coming soon.

📉 What We Noticed

- ✅ Devs install the CLI tool

- ✅ They run 1–2 prompts

- ❌ But they never check the dashboard reports

That's like installing Google Analytics but never logging in. You're doing the work without seeing what's working.

🧪 What You’re Missing

Run this:

```bash
docoreai start
# run your LLM prompts as usual
docoreai dash
```

Then get charts showing:

- ✅ Developer Time Saved

- ✅ Token Usage and Cost

- ✅ Temperature Trends

- ✅ Prompt Success Rate

Your prompts are never stored. Reports are generated anonymously.

- One-line CLI run
- Insightful charts: Developer Time Saved, Cost, Time Distribution

⚙️ Getting Started

Install and run in minutes:

```bash
pip install docoreai
# add your token to a .env file
docoreai start
docoreai dash
```

The dashboard opens automatically in your browser.

🔐 About Privacy

We respect your data. DoCoreAI never logs your raw prompts. Only anonymous usage metrics are collected, and only if you opt in.

```
Do you want to help improve DoCoreAI by enabling anonymous analytics? (y/N)
```

That’s it. Full transparency. Toggle off anytime.

🙏 Like it? Help us out

If you found this useful, please share this post on Reddit, Twitter, Hacker News, or with a friend building AI tools. Let's make prompt optimization measurable, not magic.
