How to Reduce LLM Cost with Prompt Tuning (Step-by-Step)

Your AI Bill is Out of Control. Here's the Fix.

Most teams waste 40-60% of their LLM budget on:

- Bloated prompts (e.g., 820 tokens → 320 tokens, ≈60% savings)

- Retry loops from unclear instructions

- Over-long outputs nobody reads

This guide shows exactly how to fix it, with real examples from engineering teams.

What actually drives your LLM cost?

Two silent multipliers inflate spend: over-long outputs (token bloat) and retries when responses miss the mark. If you’d like to see these in context, the demo dashboard shows cost, time saved, and prompt health with sample data. For an executive roll‑up, skim the LLM cost dashboard overview.

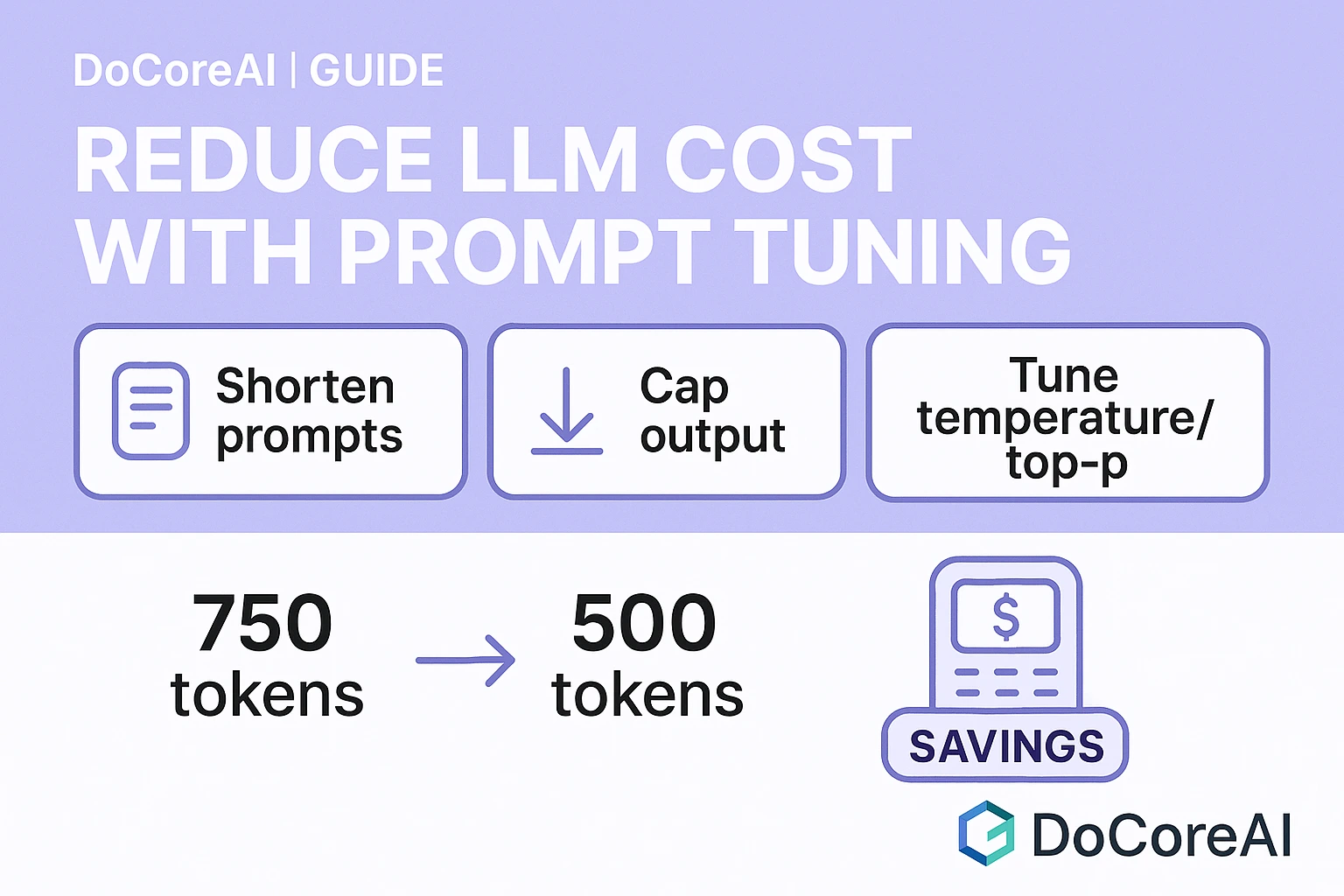

6 prompt-tuning levers that reliably cut cost

1) Shorten system & user prompts

Merge rules, remove filler, and avoid repeating context. Where the model can fetch or retrieve shared docs, reference them instead of pasting long excerpts; otherwise paste only the passages the task needs.

Before (example)

System: You are a helpful assistant... (220 tokens of rules)

User: Please summarize the following 3 pages... (600 tokens)

Total: ~820 tokens

After (example)

System: Summarize concisely for a product manager (≤120 words).

User: Summarize the linked doc. Focus: risks, dates, owners.

Context: https://example.com/spec

Total: ~320 tokens (≈60% fewer)

Tip: keep a short, reusable system prompt. For more patterns, our prompt analytics guide breaks down token usage by prompt type. Also see the prompt efficiency primer.
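To sanity-check a before/after pair like the one above, a small helper can compute the share of tokens removed. This is a minimal sketch: `estimate_tokens` uses the common rule of thumb of about 4 characters per token for English text, which is only an approximation, and both function names are made up for illustration. For billing-grade numbers, use the token counts your provider reports.

```python
def estimate_tokens(text: str) -> int:
    """Very rough estimate: about 4 characters per token (assumption)."""
    return max(1, round(len(text) / 4))


def percent_saved(before_tokens: int, after_tokens: int) -> float:
    """Share of tokens removed, as a percentage of the original count."""
    if before_tokens <= 0:
        raise ValueError("before_tokens must be positive")
    return 100.0 * (before_tokens - after_tokens) / before_tokens


# The example above: 820 tokens trimmed to 320.
print(round(percent_saved(820, 320)))  # 61
```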

2) Set explicit output length & format

Tell the model how short and in what shape to answer.

“Answer in ≤100 words.”

“Return strict JSON with 5 fields: {title, risk, owner, date, status}.”

“Stop when you list 5 items.”

This reduces token bloat and trims trailing chatter.
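Models do not always follow length and format instructions, so it can help to check replies on the client side before accepting them. The sketch below assumes the five-field JSON format from the example instruction; `parse_strict` and `within_word_limit` are hypothetical helper names, not part of any SDK.

```python
import json

# Field names taken from the example instruction above.
REQUIRED_FIELDS = {"title", "risk", "owner", "date", "status"}


def parse_strict(reply: str) -> dict:
    """Parse a reply and insist on exactly the five expected keys."""
    data = json.loads(reply)  # raises ValueError on invalid JSON
    if not isinstance(data, dict) or set(data) != REQUIRED_FIELDS:
        raise ValueError("reply does not match the expected five-field shape")
    return data


def within_word_limit(reply: str, limit: int = 100) -> bool:
    """Check a free-text reply against a word cap such as '<=100 words'."""
    return len(reply.split()) <= limit
```

Tracking how often these checks fail gives a rough signal of whether a tighter instruction is actually being followed.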

3) Tune temperature or top-p (one knob at a time)

Lowering temperature or top-p reduces tangents and retries. Adjust only one to avoid unpredictable interactions.

Not sure where to start? Our guide on best temperature settings shows ranges by task.
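One way to enforce the one-knob rule is to build sampling parameters through a small guard. This is a sketch, not a provider API: `sampling_params` is a hypothetical helper, and the `temperature` and `top_p` keys follow the naming used by OpenAI-style chat APIs. Check your provider's reference for the allowed ranges.

```python
from typing import Optional


def sampling_params(temperature: Optional[float] = None,
                    top_p: Optional[float] = None) -> dict:
    """Return request parameters with at most one sampling knob set."""
    if temperature is not None and top_p is not None:
        raise ValueError("set temperature or top_p, not both")
    params = {}
    if temperature is not None:
        if temperature < 0:
            raise ValueError("temperature must be non-negative")
        params["temperature"] = temperature
    if top_p is not None:
        if not 0 < top_p <= 1:
            raise ValueError("top_p is a probability mass in (0, 1]")
        params["top_p"] = top_p
    return params


# Merge into a request body, e.g. {"model": ..., "messages": ..., **params}.
params = sampling_params(temperature=0.2)
```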

4) Use structured tasks & tool-calling

When the job is extract/transform, tools or explicit schemas constrain output and reduce rambling.
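As an illustration, an explicit schema can be written as a tool definition in the shape that OpenAI-style chat APIs accept. The tool name `record_item`, the field list, and the `normalize` helper are assumptions made for this sketch; adapt them to your own task and confirm the exact format in your provider's documentation.

```python
# Hypothetical field list for an extraction task.
FIELDS = ("title", "risk", "owner", "date", "status")

# Tool definition in the OpenAI-style "function" shape (assumed format).
EXTRACT_TOOL = {
    "type": "function",
    "function": {
        "name": "record_item",  # hypothetical tool name
        "description": "Record fields extracted from the text.",
        "parameters": {
            "type": "object",
            # Each field is a string, or null when the text does not state it.
            "properties": {name: {"type": ["string", "null"]} for name in FIELDS},
            "required": list(FIELDS),
            "additionalProperties": False,
        },
    },
}


def normalize(raw: dict) -> dict:
    """Keep only known fields; missing ones become None, never guessed."""
    return {name: raw.get(name) for name in FIELDS}
```

The prompt wording can then stay short, because the schema carries the structure: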

“Extract fields → return compact JSON.

If a field is missing, return null; do not invent values.”

5) Tighten few-shot examples

Use 1–2 minimal, high-signal examples instead of long transcripts.

# Good

Example input → {"title":"Late shipment","risk":"medium","owner":"Ops"}

Example output → {"ok":true,"escalate":false}

Pair few‑shots with a consistent template; see the prompt efficiency primer.
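A consistent template can be as simple as a function that turns example pairs into chat messages. The sketch below assumes the common system/user/assistant message format; `build_messages` and the system prompt text are hypothetical. Compact JSON separators keep each example as short as possible.

```python
import json


def compact(obj: dict) -> str:
    """Serialize without spaces so examples cost as few tokens as possible."""
    return json.dumps(obj, separators=(",", ":"))


def build_messages(system: str, shots: list, query: dict) -> list:
    """Build a chat message list from (input, output) example pairs."""
    messages = [{"role": "system", "content": system}]
    for example_in, example_out in shots:
        messages.append({"role": "user", "content": compact(example_in)})
        messages.append({"role": "assistant", "content": compact(example_out)})
    messages.append({"role": "user", "content": compact(query)})
    return messages


# One high-signal example, reusing the pair shown above.
shots = [(
    {"title": "Late shipment", "risk": "medium", "owner": "Ops"},
    {"ok": True, "escalate": False},
)]
messages = build_messages(
    "Triage the item. Reply with compact JSON only.",  # hypothetical prompt
    shots,
    {"title": "Server outage", "risk": "high", "owner": "SRE"},
)
```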

6) Stop sequences & streaming

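Set stop sequences (e.g., \n\n or a closing brace) for structured outputs so generation ends as soon as the useful part is complete. With streaming, the UI can stop rendering once enough is shown; to save tokens rather than just screen space, cancel the request at that point as well.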
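On the client side, early stopping for a streamed JSON reply might look like the sketch below. It is deliberately simplified: it counts braces without handling braces inside string values, and `fake_stream` is a stand-in for a real SDK stream. With a real client you would also close the stream after returning; whether that stops billing for tokens not yet generated depends on the provider.

```python
from typing import Iterable, Iterator


def take_until_json_closed(chunks: Iterable[str]) -> str:
    """Collect streamed text until the first top-level JSON object closes."""
    depth = 0
    collected = []
    for chunk in chunks:
        for ch in chunk:
            collected.append(ch)
            if ch == "{":
                depth += 1
            elif ch == "}":
                depth -= 1
                if depth == 0:
                    # Object complete: stop reading the stream here.
                    return "".join(collected)
    return "".join(collected)  # stream ended before the object closed


def fake_stream() -> Iterator[str]:
    """Hypothetical stand-in for chunks arriving from a streaming API."""
    yield '{"a":'
    yield " 1}"
    yield " Hope this helps!"  # trailing chatter that is never consumed


print(take_until_json_closed(fake_stream()))  # {"a": 1}
```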

Estimate savings from tuning

Adjust a few inputs to see the effect. For real charts using your telemetry, open the demo dashboard.

Supported today: OpenAI & Groq. More providers coming soon.
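For a back-of-the-envelope version of the same estimate, the arithmetic fits in a few lines. Everything numeric below is a placeholder: the prices, call volume, output lengths, and retry rates are hypothetical, and the model assumes every retry costs one full extra call.

```python
def monthly_cost(calls: int, input_tokens: int, output_tokens: int,
                 retry_rate: float, price_in_per_1k: float,
                 price_out_per_1k: float) -> float:
    """Estimated spend, assuming each retry costs one full extra call."""
    per_call = (input_tokens / 1000 * price_in_per_1k
                + output_tokens / 1000 * price_out_per_1k)
    return calls * (1 + retry_rate) * per_call


# Hypothetical inputs: substitute your own volumes and provider prices.
before = monthly_cost(100_000, 820, 400, retry_rate=0.10,
                      price_in_per_1k=0.001, price_out_per_1k=0.002)
after = monthly_cost(100_000, 320, 150, retry_rate=0.05,
                     price_in_per_1k=0.001, price_out_per_1k=0.002)
print(f"before: {before:.2f}  after: {after:.2f}  "
      f"saved: {100 * (before - after) / before:.0f}%")
```

Note that shorter prompts, shorter outputs, and fewer retries multiply together, which is why modest changes to each can add up to a large total reduction.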

Verify improvements with a simple A/B check

- Pick 2 common tasks (e.g., a summary and a JSON extract).

- Run 20 calls each before and after tuning.

- Compare total tokens, retry %, and mean latency.
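The comparison in the last step can be scripted once each call is logged. This sketch assumes you record total tokens, latency, and whether a retry was needed for every call; the `Call` record and `summarize` function are hypothetical names, not part of any telemetry library.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Call:
    tokens: int        # prompt + completion tokens for the call
    latency_s: float   # wall-clock latency in seconds
    retried: bool      # True if the response had to be retried


def summarize(calls: list) -> dict:
    """Aggregate the three metrics from the checklist above."""
    return {
        "total_tokens": sum(c.tokens for c in calls),
        "retry_pct": 100.0 * sum(c.retried for c in calls) / len(calls),
        "mean_latency_s": mean(c.latency_s for c in calls),
    }


# Tiny illustrative sample; a real check would use 20 calls per task.
sample = [Call(100, 1.0, False), Call(300, 3.0, True)]
print(summarize(sample))
```

Run `summarize` on the before and after batches for each task and compare the resulting dictionaries side by side.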

Our demo dashboard visualizes cost, token bloat, prompt health, and developer time saved so managers can review outcomes quickly.


Common pitfalls (and quick fixes)

- Over-constraining can reduce accuracy: watch the success rate and loosen limits if needed.

- Too many few-shots bloat tokens: keep only 1–2 tight examples.

- Changing temperature and top-p together makes results hard to attribute: tune one knob at a time.

If you’re rolling this out across a team, check the pricing page for plan limits and export options.