A short technical breakdown of the GenAI Production Blind Spot, the same concept covered in our video explainer

Why GenAI Teams Lose Cost & Latency Visibility After Going to Production

And why traditional logs, traces, and cloud metrics stop working once LLMs are in the loop.

For senior engineers, AI architects, and platform owners.

Free plan • No credit card • No prompts stored

The Production Blind Spot

When GenAI systems move from experiments to production, teams gradually lose visibility into token usage, retries, latency variance, and cost drift — even when application code hasn’t changed.

- Token usage increases without obvious code changes

- Latency becomes inconsistent across similar requests

- Costs drift quietly and surface only in invoices

- Failures appear as retries, fallbacks, or degraded responses — not errors
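The last point above can be illustrated with a minimal sketch. It assumes a hypothetical `call_model` client and uses made-up model names and token counts. The caller receives one successful response, but the token totals include every attempt, including the ones that failed and triggered a fallback. This shows the general pattern and is not DoCoreAI code.

```python
from dataclasses import dataclass


@dataclass
class CallResult:
    text: str
    model: str
    attempts: int        # every attempt across all models, including failures
    input_tokens: int    # summed over all attempts, since each one is billed
    output_tokens: int


def call_with_retries(call_model, prompt, models, attempts_per_model=2):
    """Try each model in order, retrying failed attempts before falling back.

    `call_model(model, prompt)` is a hypothetical client function returning
    (ok, text, input_tokens, output_tokens). A failed attempt still consumes
    tokens, so its usage is added to the totals.
    """
    attempts = input_tokens = output_tokens = 0
    for model in models:  # primary model first, then fallbacks
        for _ in range(attempts_per_model):
            attempts += 1
            ok, text, used_in, used_out = call_model(model, prompt)
            input_tokens += used_in
            output_tokens += used_out
            if ok:
                return CallResult(text, model, attempts, input_tokens, output_tokens)
    raise RuntimeError(f"all {attempts} attempts failed")


def fake_client(model, prompt):
    # Hypothetical stand-in: the primary model keeps returning unusable output.
    if model == "primary-model":
        return False, "", 800, 300
    return True, "summary text", 800, 250


result = call_with_retries(
    fake_client, "Summarize this ticket", ["primary-model", "fallback-model"]
)
print(result.model, result.attempts, result.input_tokens, result.output_tokens)
# → fallback-model 3 2400 850
```

From the application's point of view, this request succeeded without an error. It still consumed three attempts' worth of tokens.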

Why Existing Observability Stops Working

LLM-driven systems behave probabilistically. Cost and performance are driven by execution patterns — retries, token expansion, model routing, and temperature effects — not deterministic code paths.

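This creates a visibility gap between application observability and AI system behavior. Logs, traces, and cloud metrics record that a request succeeded and how long it took. They do not record how many tokens it consumed, how many attempts it needed, or which model served it.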
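Writing per-request cost as a sum over attempts shows how execution patterns drive cost. Here $R$ is the number of attempts, $t$ is the token count per attempt, and $p$ is the per-token price. The prices used below are illustrative placeholders ($1 per million input tokens and $4 per million output tokens), not any provider's actual rates, and the token counts are invented for the example.

```latex
\begin{aligned}
C_{\text{req}} &= \sum_{a=1}^{R}\left(t^{\text{in}}_{a}\,p^{\text{in}} + t^{\text{out}}_{a}\,p^{\text{out}}\right)\\
C_A &= 800\,(1\times10^{-6}) + 200\,(4\times10^{-6}) = \$0.0016 \quad (R = 1)\\
C_B &= 3\left[800\,(1\times10^{-6}) + 600\,(4\times10^{-6})\right] = \$0.0096 \quad (R = 3)
\end{aligned}
```

Both requests run the same code path and return a successful response. Request B costs 6× as much because of retries and longer outputs.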

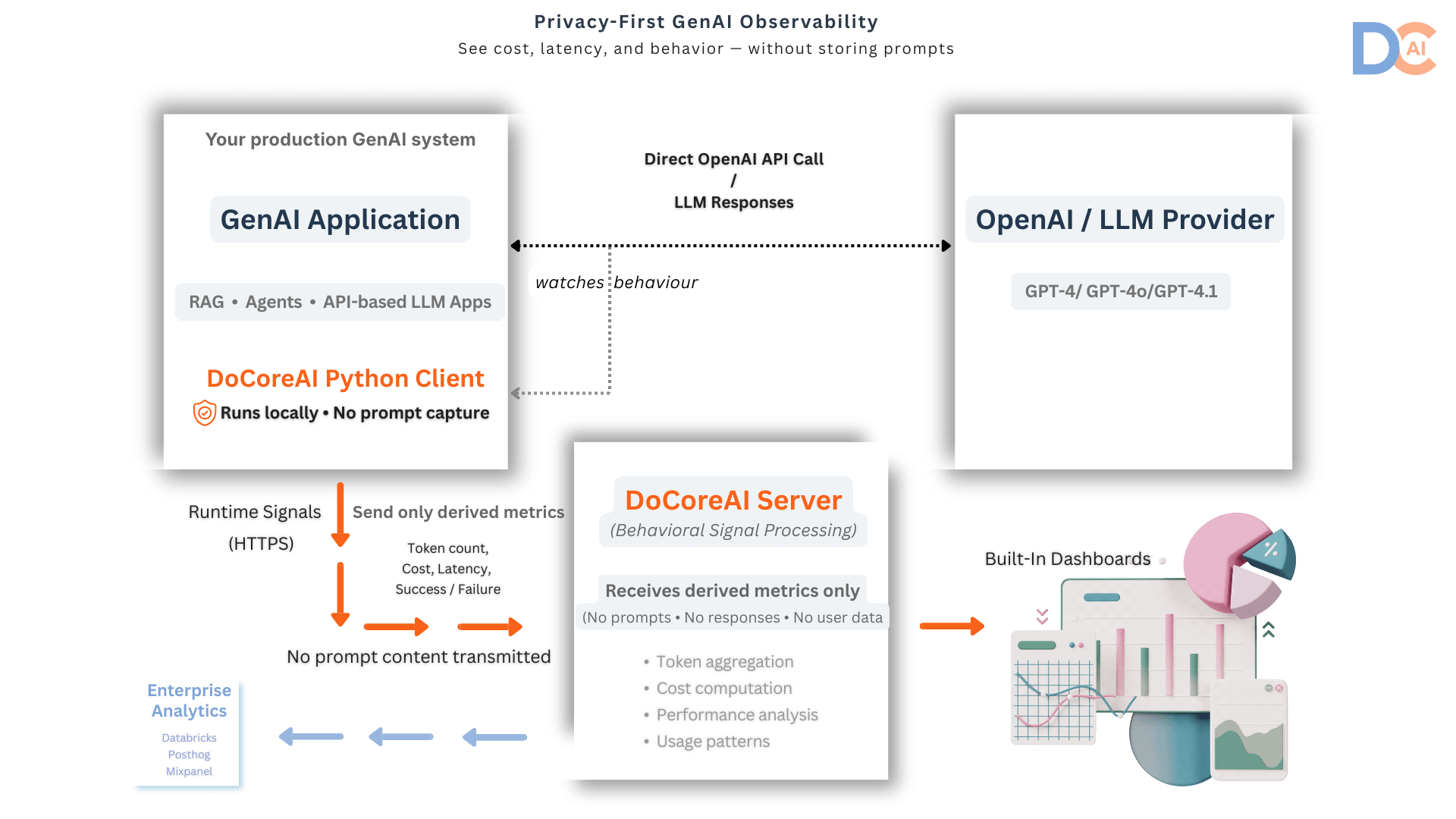

Where DoCoreAI Runs

DoCoreAI runs alongside your GenAI application.

No prompts or responses leave your environment.

Only behavioral telemetry is extracted.

Tokens • Latency • Retries • Model usage • Cost signals
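The following minimal sketch shows what a metadata-only record covering these signals might look like. The field names, the `usage` dict shape, and the per-token prices are assumptions made for illustration. This is not DoCoreAI's actual schema.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class TelemetryEvent:
    """Hypothetical behavioral telemetry record: counts, timings, and labels only."""

    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float
    retries: int
    success: bool
    cost_usd: float


def to_event(model, usage, latency_ms, retries, success, price_in, price_out):
    # Only token counts are read from `usage`. Prompt and response text are not
    # parameters at all, so they cannot end up in the record.
    input_tokens = usage["input_tokens"]
    output_tokens = usage["output_tokens"]
    return TelemetryEvent(
        model=model,
        input_tokens=input_tokens,
        output_tokens=output_tokens,
        latency_ms=latency_ms,
        retries=retries,
        success=success,
        cost_usd=input_tokens * price_in + output_tokens * price_out,
    )


event = to_event(
    "example-model",
    {"input_tokens": 800, "output_tokens": 200},
    latency_ms=1240.0,
    retries=0,
    success=True,
    price_in=1e-6,   # illustrative price per input token, not a real rate
    price_out=4e-6,  # illustrative price per output token
)
print(asdict(event))
```

The record has no text fields by construction, so the privacy guarantee comes from the schema itself rather than from later filtering.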

System Behavior Explained

Why GenAI Teams Lose Visibility After Production

A short technical explainer showing where the blind spot appears — and what signals teams can see immediately.

- No prompt capture

- No proxying traffic

- No refactoring required

- Runs locally alongside your stack
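One general way to capture telemetry in-process, without a proxy and without touching call sites, is to wrap an existing client method once at startup. The following is a minimal sketch of that technique. The `instrument` helper, the `FakeClient` class, and its `{"usage": ...}` return shape are hypothetical. This is not how DoCoreAI is implemented.

```python
import functools
import time


def instrument(obj, method_name, sink):
    """Replace obj.<method_name> in place with a timed wrapper.

    Call sites keep calling obj.<method_name>(...) unchanged, and nothing is
    routed through a network proxy. `sink` receives a metadata-only dict.
    Assumes the wrapped method returns a dict with an optional "usage" key.
    """
    original = getattr(obj, method_name)

    @functools.wraps(original)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        success, usage = False, {}
        try:
            result = original(*args, **kwargs)
            success, usage = True, result.get("usage", {})
            return result
        finally:
            # Runs on success and on exceptions; prompt text is never recorded.
            sink({
                "method": method_name,
                "latency_ms": (time.perf_counter() - start) * 1000,
                "success": success,
                "input_tokens": usage.get("input_tokens", 0),
                "output_tokens": usage.get("output_tokens", 0),
            })

    setattr(obj, method_name, wrapper)


class FakeClient:
    """Stand-in for an SDK client; `complete` and its return shape are hypothetical."""

    def complete(self, prompt):
        return {"text": "ok", "usage": {"input_tokens": len(prompt.split()), "output_tokens": 5}}


events = []
client = FakeClient()
instrument(client, "complete", events.append)   # one line at startup
client.complete("summarize this ticket")         # existing call site, unchanged
print(events[0]["success"], events[0]["input_tokens"])  # → True 3
```

Because `sink` is called from a `finally` block, an event is recorded for failed calls too. This matters for the success vs failure signals below.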

Signals Teams See Within Minutes

- Token wastage patterns

- Success vs failure execution paths

- Latency distribution (not averages)

- Daily operational GenAI cost

- ROI trends over time
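The following minimal sketch shows how per-request telemetry could roll up into several of these signals. It assumes records shaped as (day, latency, success, cost, wasted cost), where "wasted cost" means spend on attempts that did not produce the final answer. The field names and sample data are hypothetical, and this is not DoCoreAI's implementation.

```python
import math
from collections import defaultdict
from statistics import mean


def nearest_rank(values, p):
    """Nearest-rank percentile: smallest value with at least p% of the data at or below it."""
    ordered = sorted(values)
    k = math.ceil(p / 100 * len(ordered))
    return ordered[max(k, 1) - 1]


def summarize(events):
    latencies = [e["latency_ms"] for e in events]
    daily_cost = defaultdict(float)
    for e in events:
        daily_cost[e["day"]] += e["cost_usd"]
    total = sum(daily_cost.values())
    wasted = sum(e["wasted_cost_usd"] for e in events)
    return {
        "mean_ms": mean(latencies),
        "p50_ms": nearest_rank(latencies, 50),
        "p95_ms": nearest_rank(latencies, 95),
        "success_rate": sum(e["success"] for e in events) / len(events),
        "daily_cost_usd": dict(daily_cost),
        # Share of spend on attempts that did not produce the final answer.
        "wasted_share": wasted / total if total else 0.0,
    }


# Hypothetical sample: (day, latency_ms, success, cost_usd, wasted_cost_usd)
rows = [
    ("2024-06-01", 900, True, 0.0016, 0.0),
    ("2024-06-01", 950, True, 0.0016, 0.0),
    ("2024-06-01", 1000, True, 0.0016, 0.0),
    ("2024-06-01", 1000, True, 0.0016, 0.0),
    ("2024-06-01", 9000, True, 0.0096, 0.0064),  # succeeded on the third attempt
    ("2024-06-02", 1050, True, 0.0016, 0.0),
    ("2024-06-02", 1100, True, 0.0016, 0.0),
    ("2024-06-02", 1100, False, 0.0032, 0.0032),  # failed after a retry
    ("2024-06-02", 1150, True, 0.0016, 0.0),
    ("2024-06-02", 1200, True, 0.0016, 0.0),
]
FIELDS = ("day", "latency_ms", "success", "cost_usd", "wasted_cost_usd")
events = [dict(zip(FIELDS, row)) for row in rows]

summary = summarize(events)
print(summary)
```

With this sample, the mean latency is 1845 ms, the median (p50) is 1050 ms, and p95 is 9000 ms. One slow, retried request pulls the average well away from what most users experience, which is why the distribution matters more than the average. In this sample, 37.5% of spend goes to attempts that did not produce the final answer. Nearest-rank percentiles are one simple choice; histogram-based estimates are a common alternative at higher volumes.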

Who This Is For

- Senior engineers and tech leads

- AI architects and platform owners

These signals naturally roll up into cost, ROI, and governance views for leadership.
