Enterprise AI Enablement

A Practical Path to Safe GenAI Adoption — Without Disruption

Many organizations want to use GenAI but hesitate to move forward, not for lack of ambition but because of real constraints around security, governance, reliability, and accountability.

This enablement model is designed for teams who want to explore AI responsibly, understand where it genuinely helps, and move from pilot to production with confidence.

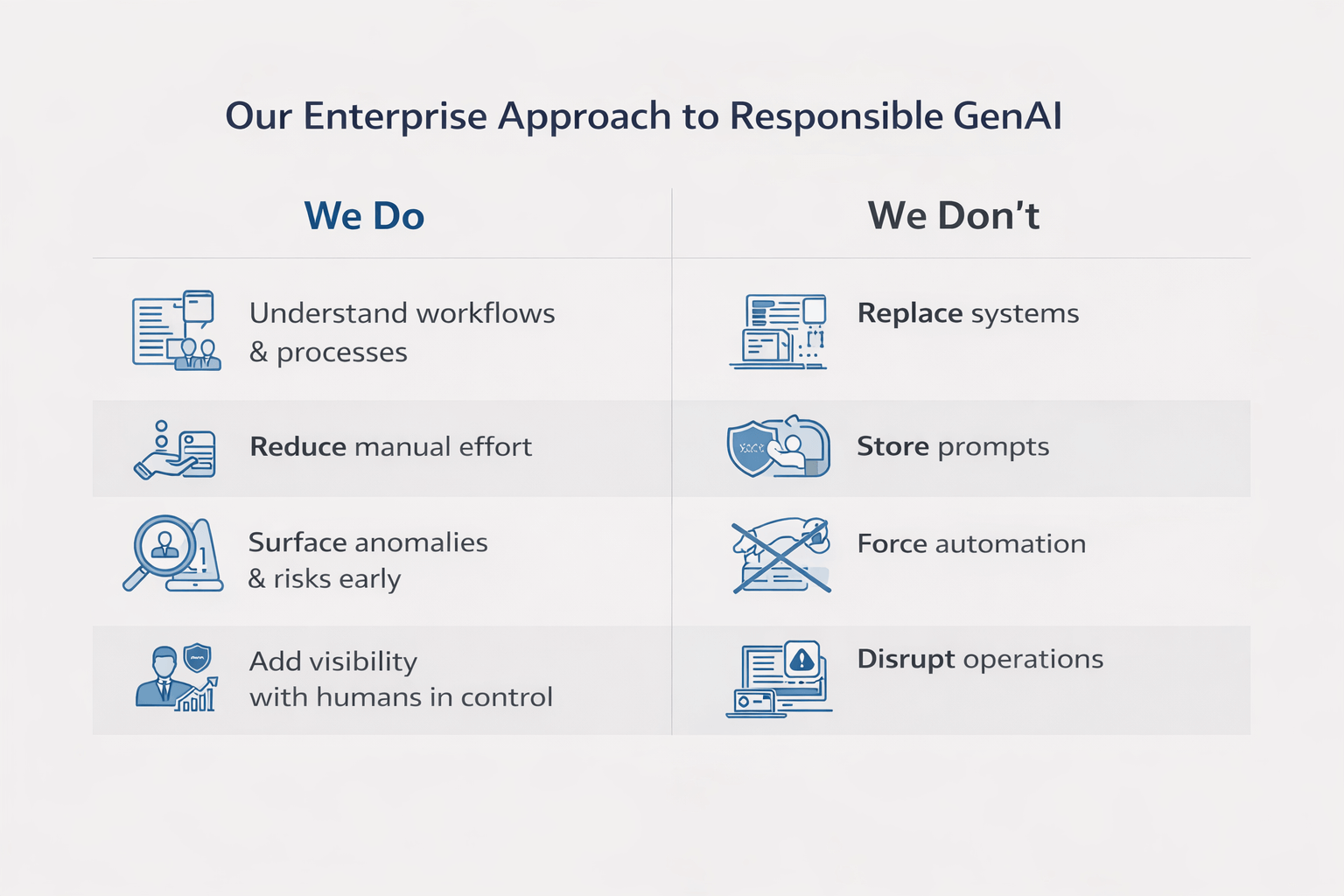

What this engagement is — and what it is not

This IS

- Advisory-led enablement grounded in existing systems and workflows

- Small, controlled GenAI pilots designed to reduce risk, not introduce it

- Human-in-the-loop designs where teams stay accountable and in control

- Governance-aware experimentation aligned with enterprise realities

- Clear explanations of impact, limitations, and operational behavior

This is NOT

- A full-scale AI transformation or system replacement program

- Rapid automation without oversight or accountability

- Model-first experimentation detached from business context

- Black-box AI deployed into production environments

- A sales-led consulting engagement

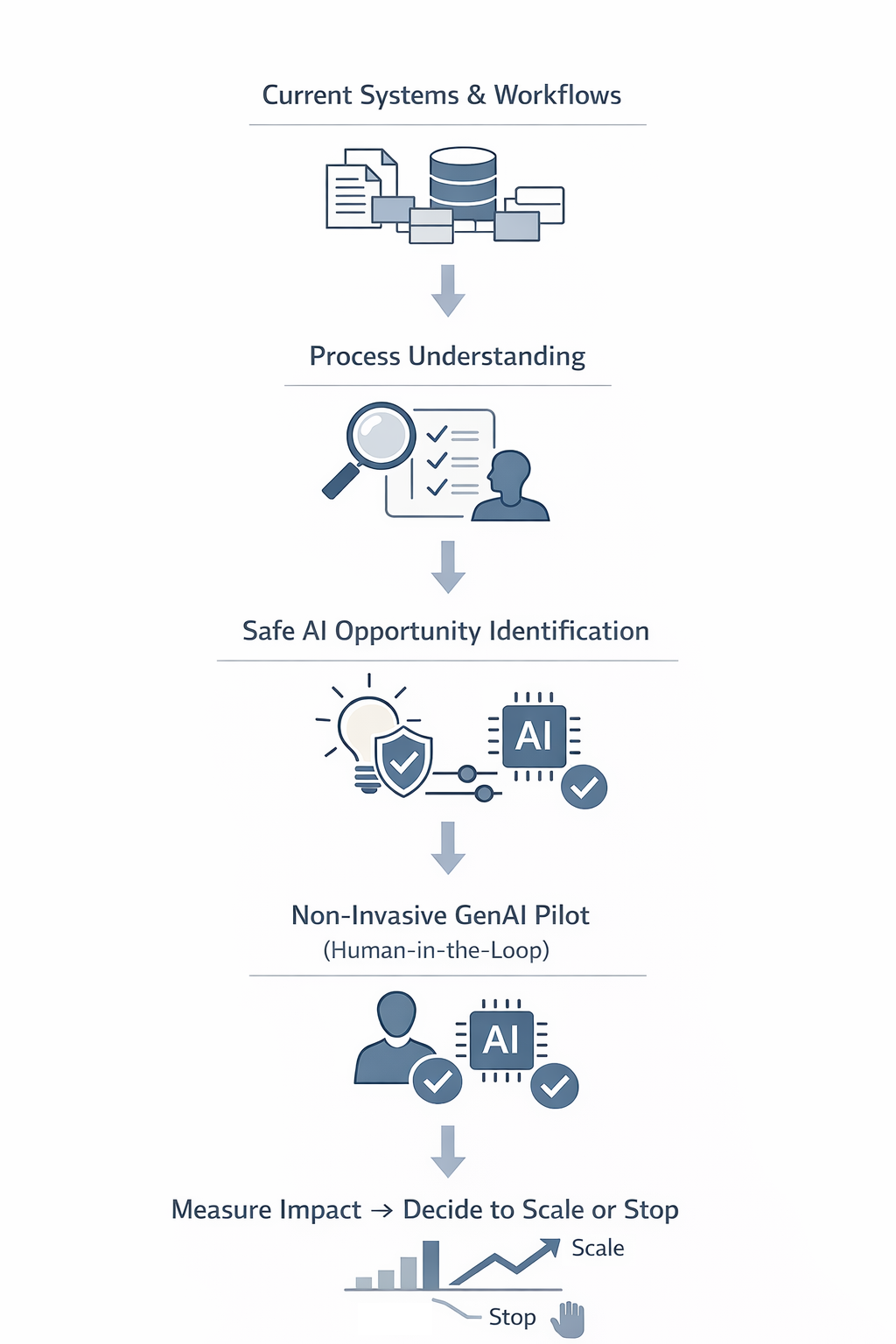

How the engagement typically works

While every organization is different, responsible GenAI adoption usually follows a calm, deliberate progression that lets teams learn safely before committing at scale.

1. Understand the current state

Review existing workflows, systems, decision points, and constraints — especially where accountability, risk, or compliance already matter.

2. Identify safe opportunity zones

Pinpoint areas where AI can assist teams without replacing judgment, destabilizing systems, or introducing uncontrolled behavior.

3. Run controlled pilots

Test GenAI in bounded environments with clear visibility, human oversight, and defined exit criteria.

4. Learn before scaling

Evaluate outcomes, operational impact, risk exposure, and team confidence before deciding whether — and how — to proceed further.

Who this approach is designed for

This engagement model is intentionally narrow. It is built for organizations that value control, accountability, and long-term confidence over speed or experimentation for its own sake.

This is a good fit if you…

- Operate regulated, mission-critical, or customer-facing systems

- Need AI decisions to be explainable, auditable, and reviewable

- Are accountable to leadership, regulators, or customers

- Prefer pilot-led learning before committing at scale

- Want AI to assist teams — not replace judgment

This is probably not a fit if you…

- Are looking for rapid experimentation without governance

- Need immediate automation across core systems

- Are optimizing for speed over safety

- Expect AI to replace decision-making responsibility

- Primarily want tooling rather than guidance and structure

Common questions leaders ask before moving forward

These are the practical questions that come up most often.

Will this disrupt our existing systems?

No. The work is designed to run alongside current workflows, not replace them. Existing systems remain intact.

Do we need to commit to large-scale AI adoption upfront?

No. Engagements start small — focused on learning, validation, and controlled pilots before any scaling decisions.

How is risk handled?

Governance, human oversight, and explainability are built into the approach — not added later.

Will our internal teams stay in control?

Yes. AI is positioned as an assistive layer. Decision ownership remains with your teams.

What does success look like early on?

Clear visibility, reduced manual effort, and informed decisions — not automation for its own sake.

Is this consulting or product-driven?

The work begins advisory-led. Product platforms support execution where appropriate — not the other way around.

Do engagements require onsite work?

Most engagements are designed to work effectively with distributed teams. In certain cases — such as regulated environments or early executive alignment phases — an initial onsite current-state assessment may be useful, followed by remote execution.

How engagements typically unfold

Engagements are designed to work alongside internal teams, providing external perspective and guidance at key decision points — without creating long-term dependency or disruption.

Advisory-led collaboration

Work is guided by an external advisor who helps frame problems, challenge assumptions, and validate decisions — while execution remains with internal teams.

Internal ownership

Teams retain ownership of systems, data, and outcomes. AI remains an assistive layer, and accountability stays within the organization.

Learning before commitment

Decisions to scale, pause, or stop are made only after teams understand real operational impact, risks, and limitations.

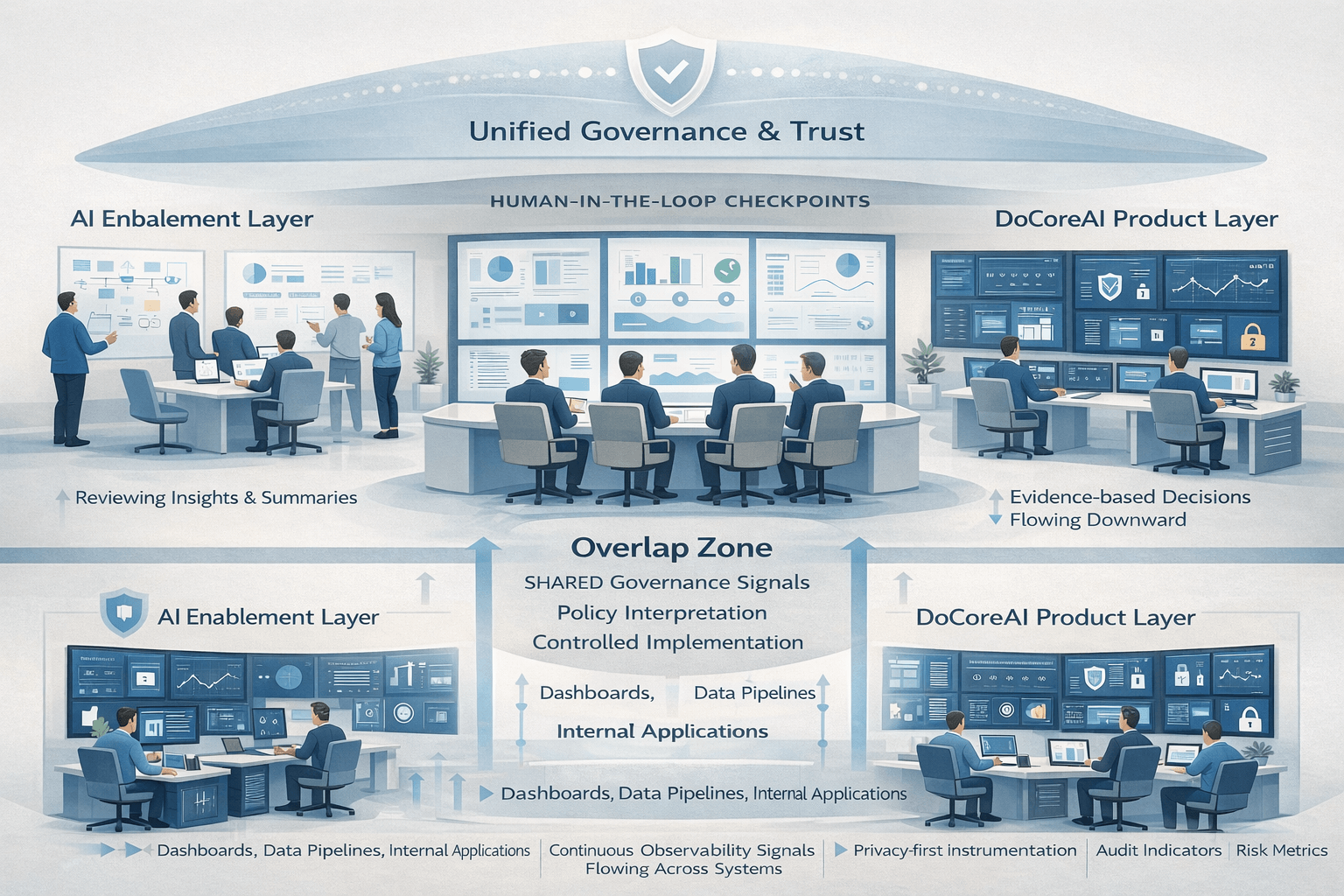

How this work relates to DoCoreAI

DoCoreAI operates as a product company, but this engagement is intentionally advisory-led so that GenAI adoption decisions are grounded in governance, not tooling. The engagement's purpose is to help teams understand where GenAI fits, how it behaves inside real systems, and what controls are required before production use.

In some cases, organizations choose to operationalize this approach using DoCoreAI — a privacy-first observability and governance layer designed to support safe GenAI deployment in enterprise environments.

The advisory engagement comes first. Product adoption, if any, follows only when it clearly supports the organization’s governance, security, and operational requirements.